Author: Thomas J. Dover, Ph.D., Federal Bureau of Investigation (FBI)

Dr. Dover serves as a crime analyst with the FBI’s Behavioral Analysis Unit and instructs on topics pertaining to behavioral analysis for the FBI National Academy.

Behavioral and Investigative Research

Behavioral research focuses on understanding, predicting, and evaluating how people interact with each other and their environment. It is a general category of research that spans the social and cognitive sciences. Investigative research is the application of behavioral research to law enforcement investigations. It focuses on interactions associated with both the crime (involving offenders, victims, and witnesses) and investigation (involving police, prosecutors, and the public). Investigative research findings can be one source of useful information that when combined with other contextual evidence can provide support for understanding a criminal event and informing investigative decision-making or practice.

In general, research is systematic inquiry. It starts with a question and uses a process to find answers. This search for answers is expanded through the scientific method, which ensures the testability of initial questions and the generalizable and predictive quality of findings.

Behavioral and investigative research can be actively sought out by research-savvy decision makers to address a specific problem or come to light through consultation with other law enforcement agencies or academic partners. Sometimes, investigators are actively solicited by researchers who feel their findings are relevant to an ongoing case.

Where to Find Research

Behavioral and investigative research is available through several sources.

- Universities produce research through faculty members; research centers; and/or ongoing partnerships with local, state, and federal agencies. The results of this research are often available through academic journals, public reports, or even project websites.

- Many federal law enforcement agencies (e.g., FBI, DHS, ATF, U.S. Marshals) have research programs dedicated to increasing the efficacy of law enforcement operations. Generally, public research reports can be found through online searches and are available through the agency or the research program directly.

- Private and nonprofit organizations will often produce research on a specific topic and then issue a report directly available from the organization and/or online.

Research Limitations

One of the best ways to evaluate a specific research effort is by recognizing its limitations, much the same way one might assess the strength of an investigation by recognizing where the case is weak. It is vital to know not only when to reject research findings but also when limitations are not grounds for rejection. Some limitations are acceptable if they are known and considered when conducting analysis and making recommendations.

Critical Questions

When consuming research, it is necessary to ask critical questions. These should focus on understanding the boundaries of that research by addressing initial assumptions, the design of the research, and the interpretation of the findings. Asking critical questions provides a method of probing for limitations; some may have been accounted for by the researchers, and others may have been accidentally overlooked or purposefully obscured. Five critical questions will help decision makers determine the limitations of research and the utility of findings. While these questions are offered in the context of behavioral and investigative research, they are important to keep in mind when evaluating any type of research.

- What is the purpose of the research?

- What is being asked?

- How is it being asked?

- What is important about the research findings?

- What can and cannot be done with the results?

What Is the Purpose of the Research?

Knowing why research is conducted is a significant part of understanding what drives it, as well as what may contribute to initial biases and assumptions. Understanding the purpose of research involves recognizing what type is being conducted and what it is intended to achieve.

Type of Research

Basic research focuses on understanding the scope of a problem. For example, collecting, coding, and collating case information to explore topics like “child abduction,” “computer hacking,” or “serial murder” is basic research. While not used to solve immediate problems, basic research is typically employed to describe a phenomenon. It can also be used to develop theories and models about a particular topic.

Applied research is driven by specific questions related to particular problems. Implementing an explicit set of questions is an important part of structuring applied research goals and relating them to the real world. In an investigative context, applied research can produce findings in the form of recommendations and predictions.

Both basic and applied research support each other and contribute significantly to the development of knowledge. 2 However, they have different goals, expectations for findings, and applications.

Basic and Applied Research

[The following study is hypothetical and presented for illustrative purposes only.] Basic research on “child abduction” is important because it creates a foundation of knowledge that defines the scope of activities and behaviors observed in these crimes. However, descriptive statistics and findings generated from this data (e.g., the average age of an abductee) may not be useful in a specific investigation without first asking a more directed question, like:

— What is the most effective neighborhood canvass strategy to employ when investigating a child abduction?

In this applied question, a hypothesis can be developed based on observations in the basic research. One such hypothesis might be:

— The most effective neighborhood canvass depends on the age of the abducted child.

This is not a research finding. It is an assertion that focuses the inquiry so that researchers can effectively test the data and draw comparisons and correlations between the:

- Age of an abductee.

- Use of neighborhood canvass strategies.

- Final resolution of the case.

Sponsorship

Research conducted to support a decision justifies or reinforces that decision after it has been made. This approach can run the risk of producing a conflict of interest for the researcher. On the other hand, research conducted to inform a decision contributes to the ongoing calculus for a decision yet to be made. With this approach, it is the research findings, not the research purpose, that support one decision over another.

Sponsorship for research can influence whether it is conducted to support or inform a decision. Understanding who is sponsoring research — through funding or other material support — will alert decision makers to possible underlying motivations for that research. Potential for conflict of interest does not mean that research is invalidated or biased. However, decision makers should be wary of research, regardless of the findings, that ignores potential conflicts or, worse yet, attempts to hide them.

“ … while research is a tool that can be used to great benefit, it can also be misapplied.”

Research Sponsorship

Research conducted internally may be biased toward a specific outcome. For example:

— A police department conducting an evaluation of its violent crimes unit may be motivated to show it is highly effective (in response to public criticism) or understaffed (to appeal to city council for more funding).

Likewise, research funded by an outside source with a known agenda or vested interest in the outcome is also potentially biased. For example:

— Video game industry-sponsored research that investigates the influence of violent video games on teen aggression might be biased toward minimizing any correlations between the two.

Research consumers should pay close attention to how researchers deal with potential conflicts of interest and whether the findings reflect or are influenced by a presupposed position.

What Is Being Asked?

When assessing research, it is important to understand how that research was conceptualized. Conceptualization involves establishing, as objectively and precisely as possible, a problem’s factors and determinants. This provides the foundation for what research questions are asked, how terms are defined, what data is used, and, ultimately, the context for generating investigative leads. Imprecise terms at the beginning of a research effort can negatively impact the construction of research methodology, interpretation of findings, and eventual recommendations. For instance, one can consider research focused on the “use of a weapon during a homicide” and some of the issues related to the key terms involved.

- Frequently, “homicide” is misused to refer to what is more precisely defined as “murder,” or criminal homicide. Aside from the criminal act of killing, “homicide” also includes noncriminal acts, such as justifiable homicide, institutional killings, and killing during armed conflict. It is important to establish which concept, “homicide” or “murder,” the researchers intend to focus on and measure.

- What qualifies as a “weapon”? Does this only refer to instruments like a gun or knife, or can it include hands and feet? What about pushing a victim down a flight of stairs? Are the offender’s hands the “weapon” or are the stairs?

- Does a weapon’s “use” only relate to cause of death, or does it include the period leading up to death? If a gun is used to control a victim, but a knife is used to kill the victim, do both qualify as a weapon used “during a homicide”?

In this example, the definition of “homicide” and distinctions between “weapon” and “weapon use” can have a significant impact on what information is collected, the availability of data, and the implications of study results. Taking the time to explicitly understand core terms will often lead to valid questions about how the researchers turned them into measurable concepts and whether the metrics used make sense given the available data.

How Is It Being Asked?

The process of moving a research effort from conceptual factors to measuring and testing those factors is referred to as operationalization and is encapsulated in a research methodology. A methodology focuses on how to answer a research question and/or test a hypothesis. Among other things, the methodology should address who (or what) 3 is being researched and the mechanics of data collection.

Population

In research, there are two types of subject populations. First, there is the target population — the group about which researchers wish to draw conclusions. Second, to identify practical data collection strategies, researchers must operationalize the target population into an accessible population — those subjects reasonably available for study.

For instance, a target population of “serial rapists” includes both identified and unidentified offenders. If researchers intend to collect their data through interviews, this strategy will likely preclude unidentified offenders from being subjects. 4 Additionally, it may be more cost-effective, efficient, and safe to interview convicted serial rapists in prison facilities. Finally, due to mandates of ethical research, interviews can only be conducted if the subject volunteers. Therefore, although the target population is “serial rapists,” the accessible population is “convicted serial rapists in prison who have volunteered to be interviewed.”

Why does this matter? The closer the accessible population matches a target population, the more useful the findings about one will be in drawing conclusions about the other. However, even if the accessible population does not match all characteristics of the target population, sometimes useful comparisons can still be drawn. For instance, research focused on the “detection of deception in suspects” might use an accessible population of “college students.” While this accessible population is significantly different from a target population of “suspects” in several ways, some of the physiological mechanisms involved in the act of lying may be universal 5 and allow for meaningful comparisons.

Sample

Ideally, researchers want to include every member of the accessible population as a subject. However, due to available resources, this may not be possible. Under these circumstances, a smaller, more manageable sample of the accessible population might be selected. There are numerous ways outside the scope of this article to draw samples. 6 Regardless of the technique, the goal of sampling is to select enough subjects to adequately represent the diversity of the accessible population and, thus, allow researchers to draw inferences.

The gaps between target populations, accessible populations, and samples can be subject to selection bias. This category of bias occurs when the research subjects do not sufficiently represent a population. Sometimes, selection bias is unavoidable as it may have to do with the availability of subjects or their willingness to participate in the research. It may also be difficult to ascertain the representativeness of a sample if it is small or the width and breadth of variation in the accessible population is unknown.

Survivor Bias

Survivor bias is a specific form of selection bias. It refers to a tendency to focus attention on success and ignore failure under the same conditions.

Example 1: Prison Populations

Prison populations represent investigative successes and criminal failures. However, prison populations may not sufficiently represent unidentified offenders (investigative failures and criminal successes). Research that explores detection avoidance (DA) behaviors and focuses on convicted offenders may not adequately represent DA behaviors employed by unidentified offenders. In fact, the DA behaviors used by unidentified offenders may be more effective than those used by identified offenders and explain why they remain unidentified.

Example 2: Best Practices

A study of police burglary units with the highest clearance rates might focus on the policies and procedures that create a successful burglary unit. The intent for this best-practices study might be to provide useful insights for other departments. However, this approach does not identify and consider other burglary units that employed the same “successful” policies and procedures but still had low clearance rates. What made units successful is important, but why other units (given the same policies and procedures) were not successful may be a key to understanding how to reproduce success and avoid failure in other departments.

Researchers can address selection bias by carefully describing the sample and caveating how their methods of establishing the accessible population and sampling may impact the generalizability of research results. The merits of the research findings can then be considered by a decision maker in light of those potential limitations.

Data Quality

Understanding the quality of data used in research is an important part of recognizing how concepts are measured. For instance, if a researcher intends to measure the “level of violence in a city,” the number of thefts does not provide a useful, or valid, measure of the concept of “violence.” The number of homicides will provide a more valid measure of violence, but it only addresses a specific type of violent activity. The numbers of assaults, robberies, sexual assaults, and murders would be an even more valid measure because they cover a much broader concept of “violence.”

Further, data that is not reliable cannot be used to make sound comparisons across data points. Data reliability is often a product of how data collection has been operationalized.

Data Reliability

[The following study is hypothetical and presented for illustrative purposes only.] An applied research study focuses on “body disposal in murder” and proposes the following hypothesis:

— Murder offenders hide the victim’s body to avoid being associated with the victim.

To test this hypothesis, researchers decide to code information from solved murder case files into a database. One of the concepts the researchers want to capture is whether the offender hid the victim’s body. This concept is operationalized through the following question:

— In what condition did the offender leave the victim’s body?

To focus data entry, the researchers list potential answers, or “values,” to this question.

- Openly displayed.

- Hidden/covered.

- Left as is.

- Undetermined/unknown.

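Coders reviewing the same case file, in which the victim’s body was found covered with leaves, may select different values.

- One coder interprets this as “hidden/covered” because the victim is covered with leaves.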

- Another coder notes that it is fall and interprets this as “left as is,” attributing the victim’s body being covered with leaves to the season, not the offender’s behavior.

- Still, a third coder considers the same factors as the other two and decides to select “undetermined/unknown.”

Researchers can enhance reliability during data collection by developing a coding manual that provides explicit definitions to calibrate data entry. 7 Reliability in data coding is often measured via inter-rater reliability testing, which determines the amount of agreement or disagreement that exists between coders when interpreting the same information. This type of testing can provide insight into the coding process and give researchers an idea of what data might be subject to reliability issues. Unfortunately, these issues are commonly obfuscated in public research studies. However, if there are significant questions about how different factors were operationalized and interpreted, then it is reasonable to seek a more detailed account of the data collection and coding process by contacting the researcher(s). If researchers do not treat validity and reliability as important factors or do not adequately address questions about the reliability of data, consumers of that research should be wary.
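As a rough sketch of what inter-rater reliability testing measures, the following Python example computes simple percent agreement and Cohen's kappa for two coders. The six codings are invented for illustration, the value labels are shortened versions of the hypothetical body disposal values, and the function names are assumptions rather than part of any published coding protocol.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of cases on which two coders chose the same value."""
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Agreement between two coders, corrected for chance agreement."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    # Chance agreement: probability that both coders pick the same value
    # if each coded independently at their own observed frequencies.
    expected = sum(counts_a[v] * counts_b[v] for v in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical codings of the same six case files (illustrative only).
coder_1 = ["hidden", "hidden", "as_is", "displayed", "unknown", "hidden"]
coder_2 = ["hidden", "as_is", "as_is", "displayed", "hidden", "hidden"]

print(f"Percent agreement: {percent_agreement(coder_1, coder_2):.2f}")
print(f"Cohen's kappa: {cohens_kappa(coder_1, coder_2):.2f}")
```

Kappa is lower than raw agreement because some matches would be expected by chance alone. A research consumer does not need to run such a test, but knowing that it exists makes it reasonable to ask whether the researchers did.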

What Is Important About the Research Findings?

The way research findings are presented is a vital part of how they are incorporated into the decision-making process. The conveyance of the results and the interpretation of their meaning will reflect what the researchers consider important, trivial, or subject to further inquiry.

Statement of Findings

Research findings include the results of data analysis and the interpretation of those results. It is vital to the research process that the results are reported separately from their interpretation to ensure that a consumer can independently review the findings. Results should be reported with neutral language that, as objectively as possible, reviews outcomes. Often, these results are presented in charts, graphs, or other visualizations that make review of the information as easy as possible.

Although reporting results should be objective, for sake of space or brevity, researchers may need to make decisions about what is and is not necessary to present. For this reason, it is important to be cognizant of what is included in the results, how they are framed, and what is conspicuously missing. While there may be trivial reasons as to why certain findings are not reported or emphasized, there may also be a bias or conflict of interest that has impacted these decisions.

Reporting Results

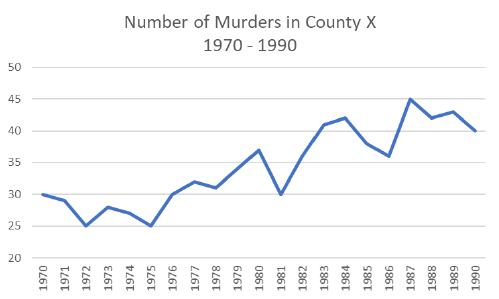

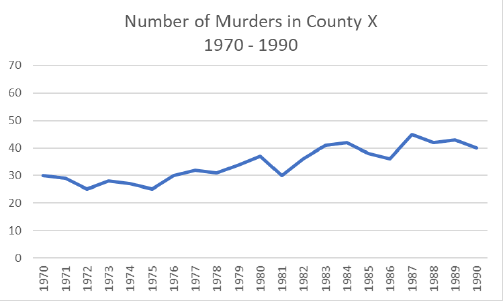

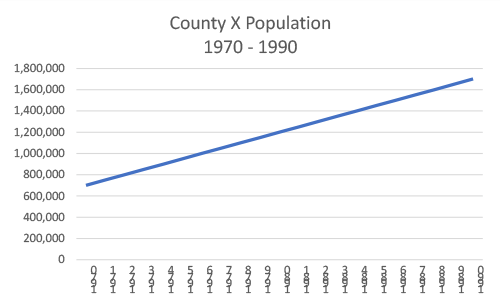

Decisions about how to present information are important. Visualizations can be manipulated in ways to affect how consumers react to them. For instance, the following graphs show the number of murders in County X (a fictitious U.S. county) from 1970 to 1990.

[Graphs A and B: number of murders in County X, 1970 to 1990]

While both graphs convey the same information, (A) makes the increase in the number of murders seem more dramatic than (B) simply because the vertical scale has been manipulated.

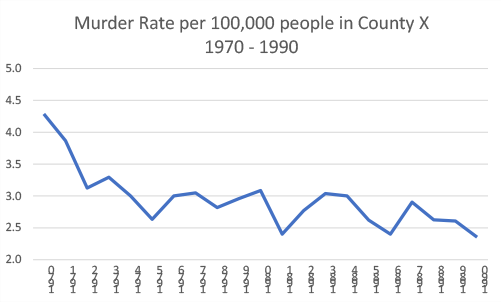

Another researcher might present the effect of County X’s population (C) on murder risk by reporting murder rate (D).

[Graphs C and D: County X population and murder rate]

This representation shows a reduction in murder risk due to the increasing population. Essentially, while the number of murders is rising, population is increasing at a greater pace, so the murder rate is declining.

Presenting information in different ways tells different stories for different purposes. These examples (A-D) are all factual in that they are derived from undisputed data; however, they provide different context.

- If the research findings are to be applied to agency workload, then the raw number of murders (A and B) may be relevant.

- If the findings are to be applied to community risk, then the rates (D) may be more appropriate.
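The difference between a count and a rate can be shown with a short calculation. The figures in this Python sketch are invented placeholders, not the County X data behind the graphs, and the function name is an assumption. The sketch only illustrates how a rising number of murders can coincide with a falling murder rate when population grows faster.

```python
def rate_per_100k(count, population):
    """Convert a raw count into a rate per 100,000 residents."""
    return count * 100_000 / population


# Invented values for illustration only: year -> (murders, population).
county = {
    1970: (20, 100_000),
    1980: (30, 200_000),
    1990: (40, 400_000),
}

for year, (murders, population) in county.items():
    rate = rate_per_100k(murders, population)
    print(f"{year}: {murders} murders, {rate:.1f} per 100,000 residents")
```

In these placeholder figures the count doubles while the rate halves, so a report that shows only one of the two tells only part of the story.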

Comparing Research

[The following study is hypothetical and presented for illustrative purposes only. These hypothetical findings are not actionable or applicable to an actual case.] A detective interested in better understanding the use of pornography by “serial rapists” encounters two different studies on serial rapists that address pornography use with very different results.

- Study A finds that 25% of serial rapists had a pornography collection.

- Study B finds that 75% of serial rapists had a pornography collection.

- Study A focuses on interviews of 34 convicted serial rapists at a maximum security facility who offended between 1980 and 1990.

- Study B focuses on serial rapists from a nationwide repository of 3,000 closed case files from 1970 to 2018.

- Study A may represent a subgroup of Study B.

- The differing methods — “interviews” versus “case file reviews” — may have had varying success in identifying the offender’s use of pornography.

- Given the accessibility of pornography on the internet, the time frames associated with the two different studies may have had an impact on its availability to the offenders.
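Sample size is one further difference that can be quantified. This Python sketch applies the standard normal-approximation margin of error for a proportion to the two hypothetical findings. It assumes, purely for illustration, that each percentage is based on the study's full stated sample (34 interviews and 3,000 case files) and that subjects were drawn at random, neither of which the hypothetical studies state. The approximation is also coarse for a sample as small as 34.

```python
import math


def margin_of_error(proportion, n, z=1.96):
    """Approximate 95% margin of error for a sample proportion."""
    return z * math.sqrt(proportion * (1 - proportion) / n)


# Percentages and sample sizes taken from the hypothetical studies.
studies = {"Study A": (0.25, 34), "Study B": (0.75, 3000)}

for name, (p, n) in studies.items():
    moe = margin_of_error(p, n)
    print(f"{name}: {p:.0%} +/- {moe:.1%} (n={n})")
```

Under those assumptions, Study A's estimate is far less precise than Study B's, yet the two ranges still do not overlap. Sampling error alone is therefore unlikely to account for the gap, which points back to differences in population, method, and time frame.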

What Can and Cannot Be Done with the Results?

The relevance of research findings in an investigation is not only a factor of conceptual and methodological construction but also the current context and needs of the investigation. In other words, the decision maker determines usefulness by weighing research limitations against moving a case forward via the practical application of the research findings.

Probabilistic Inferences

Investigation is probabilistic. It requires developing expectations of likelihood about people and events. For instance, identifying and pursuing a suspect is an exercise in assigning a likelihood, or probability, of culpability. Research findings tend to be expressed or understood as probabilities, often presented as percentages. These probabilities provide inferences and predictions about people and events generated from an aggregated understanding of prior people and events.

To effectively incorporate research findings, a decision maker needs to understand that probabilistic inferences can inform choices of appropriate action. Frequencies, percentages, and/or probabilities may have an important role in helping an agency make strategic decisions. Yet, these same findings may not be useful in trying to understand a specific case, formulate actionable predictions during an investigation, or make reasonable determinations of risk without additional considerations.

Tying Research to Investigative Purpose

[The following study is hypothetical and presented for illustrative purposes only. These hypothetical findings are not actionable or applicable to an actual case.] A young woman is strangled in her apartment. While discussing the case with a behavioral analyst, one of the detectives on the case asks,

— Could this have been a female offender?

The behavioral analyst tempers his response by looking at a nationwide database of murder and nonnegligent manslaughter cases in which the offender’s sex was known:

- Ten percent of the cases involved a female offender.

- Seven percent of cases with a female offender involved strangulation (less than 1% of all cases).

- Nineteen percent of strangulation cases involved a female offender.
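The three percentages answer different questions, and Bayes' rule shows how they fit together. The following Python sketch uses only the hypothetical figures listed above; the variable names are illustrative.

```python
# Hypothetical figures from the example (not actionable).
p_female = 0.10                 # P(female offender)
p_strangle_given_female = 0.07  # P(strangulation | female offender)
p_female_given_strangle = 0.19  # P(female offender | strangulation)

# Joint probability: female offender AND strangulation.
p_female_and_strangle = p_female * p_strangle_given_female

# Bayes' rule rearranged to recover the overall share of strangulation cases.
p_strangle = p_female_and_strangle / p_female_given_strangle

print(f"Female offender and strangulation: {p_female_and_strangle:.1%} of all cases")
print(f"Strangulation (any offender): {p_strangle:.1%} of all cases")
```

The 10% figure describes all cases in the database, while the detective's question concerns a strangulation case, so the 19% figure is conditioned on the fact that is actually known. Neither number identifies the offender in this case.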

— Under certain circumstances, the potential for a female offender using strangulation may be more likely …

and then asking a series of diagnostic questions to determine if those circumstances exist in the current investigative context. For example, if there is a potential female suspect, would she have had the ability and opportunity to physically subdue this specific victim?

Diagnosticity

Probability-based knowledge does not apply to specific instances but rather to general tendencies and trends. 10 Consequently, there is no way to know if a specific case involves an exception or outlier to research-derived inferences without further diagnostic questions. These are critical and testable inquiries that prune possibilities and provide the most likely answer(s) to a problem given current information. Probability-based knowledge should not be regarded as a fact pattern to direct decision-making but rather to formulate useful diagnostic questions, the answers to which further inform decision-making.

Diagnosticity in Investigation

[The following study is hypothetical and presented for illustrative purposes only. These hypothetical findings are not actionable or applicable to an actual case.] A detective is investigating a burglary and notes that the offender ate food at the scene. The detective develops a theory:

— The offender is homeless.

However, to avoid letting personal experience bias the investigation, the detective finds a study with a sample of 500 resolved single-offender burglaries. In 44% of the burglaries in the study, the offender ate food at the scene. In those burglaries,

- Forty-eight percent of the offenders were homeless, and 52% were not homeless.

- Seventy-three percent were committed by juveniles and 27% by adults.

- Of the juveniles, 32% were homeless, and 68% were not homeless.

- Of the adults, 92% were homeless, and 8% were not homeless.
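The relationship between the overall figure and the age-specific figures can be checked with the law of total probability. This Python sketch uses only the hypothetical percentages from the burglary study; the variable names are illustrative.

```python
# Hypothetical figures for burglaries in which the offender ate food at the scene.
p_juvenile = 0.73
p_adult = 0.27
p_homeless_given_juvenile = 0.32
p_homeless_given_adult = 0.92

# Law of total probability: overall share of homeless offenders.
p_homeless = (p_juvenile * p_homeless_given_juvenile
              + p_adult * p_homeless_given_adult)

# Bayes' rule: among homeless offenders, what share were adults?
p_adult_given_homeless = p_adult * p_homeless_given_adult / p_homeless

print(f"Homeless overall: {p_homeless:.1%}")
print(f"Adult, given homeless: {p_adult_given_homeless:.1%}")
```

The subgroup figures recombine to roughly the 48% reported overall, which is close to a coin flip. Conditioning on age shifts the likelihood sharply in either direction, which is why a question such as "Was the offender likely a juvenile or an adult?" has diagnostic value for the detective's theory.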

Conclusion

There are many different factors to consider when evaluating the usefulness of research for investigative decision-making. The key is to start from the premise that every research effort has flaws. It is in a decision maker’s best interest to know what those flaws are and how they can impact findings. The five questions outlined in this article are not an exhaustive list of considerations, but they provide a good place to start. Understanding the purpose of a research effort, what the researchers are asking, and how they are asking it are fundamental components of seeing how that research came to be and the methods used to execute it. Frequently, this is where initial flaws in a research effort can be identified. Recognizing how those limitations impact the findings, recommendations, and eventual application of research is a vital part of responsible research consumption. Most important, research involves simplification and error, but it can still be immensely useful. It is in a decision maker’s best interest to be open to research but also keenly aware that research, like any other tool, must be evaluated in terms of the current problem being addressed. Ultimately, investigative decision makers need to treat the consumption of research the same way they might treat an investigation — remain skeptical, ask a lot of questions, and, in the end, let evidence shape how to proceed.
